Create Prompt Templates with querifai.ai

Prompt Engineering Recap

In our previous article on prompt engineering, we introduced some basic and advanced prompt engineering techniques, such as adopting a persona or few-shot learning. We also provided examples and showed how to get started with language models on querifai.ai. If you are not yet familiar with prompt engineering, we encourage you to read that article first.

In this article, we pick up where we left off, shifting the focus from prompt engineering itself to creating prompt templates.

Why Use Prompt Templates with querifai

Efficient Storage and Reusability: Sometimes a single word, and more often a particular combination of prompt engineering techniques, makes the difference between a useless and a mind-blowing result. Saving your successful prompts in one place lets you build a private best-practice library that you can rely on for future tasks. If prompt engineering is not for you, you could also ask someone you know to create prompt templates for your most frequent tasks and avoid having to learn the details yourself.

Consistency and Standardization: Some tasks benefit from output formats that stay consistent over time. Even if you know how to write a certain prompt, you would still need to remember the exact writing style and format settings. Prompt templates help maintain consistency and save you from reverse engineering a format you produced earlier.

Customization and Adaptability: To make prompt templates more versatile, you can include variables that you fill in each time you use the template. Variables are useful for changing the writing style, length, formatting, or certain content aspects while keeping the rest of the prompt unchanged. In addition, you can choose among large language models from different vendors to find the right fit for your use case.

Generate Prompt Templates with querifai

To create a prompt template, start our free trial (link here) and navigate to Language and then Basic chat. Refine your prompt with prompt engineering techniques until you are satisfied with the outcome.

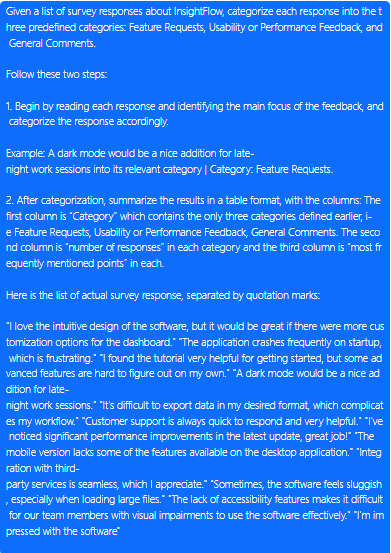

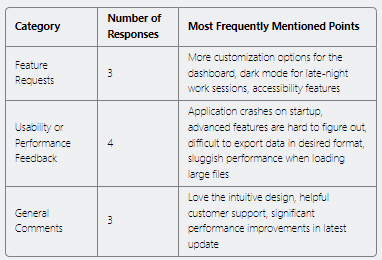

We did just that in our previous article, so we will reuse its last example, in which we categorized and aggregated survey responses.

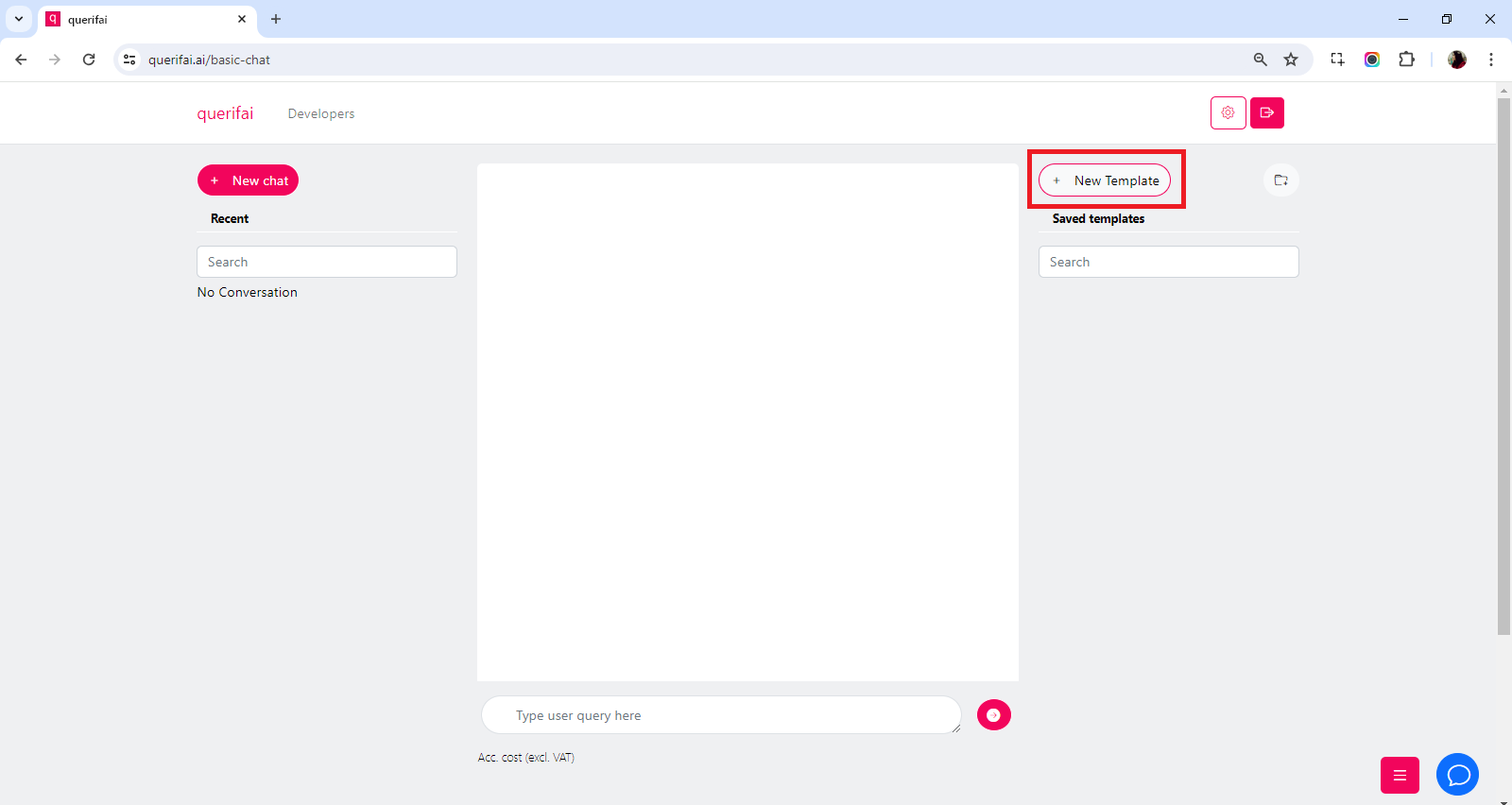

Now, let’s create a prompt template by clicking “New Template” (see below).

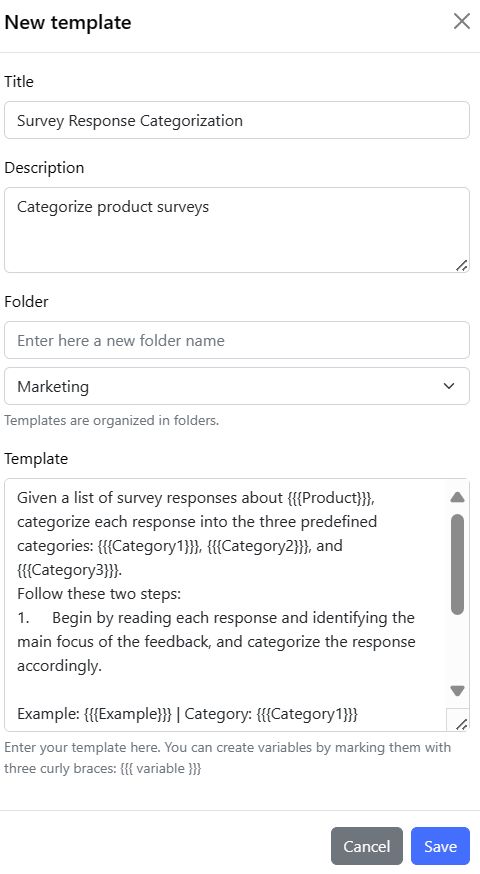

Enter a title, a description, and the folder in which you want to store the template on querifai.ai. Then paste the prompt you created earlier into the “Template” section (see below).

If the prompt should stay exactly the same every time you use it, you’re all set.

In this scenario, however, variables make the prompt far more useful, for example by letting you change the categories each time you use it.

To include variables, edit the prompt you created earlier and replace each category with a variable name enclosed in triple curly braces, for example {{{category1}}}.

Use this approach for every variable you want to define. If the same variable appears several times in the text, give each occurrence the same name.
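To make these placeholder rules concrete, here is a minimal Python sketch of how a tool could detect the variables in a template. It is not querifai's implementation: the regular expression assumes that variable names consist only of letters, digits, and underscores, and the template text is a hypothetical, shortened stand-in for the survey prompt from our previous article. Note how a name that occurs several times is reported only once.

```python
import re

# Assumed placeholder syntax: three opening braces, a name made of
# letters/digits/underscores, three closing braces, e.g. {{{category1}}}.
PLACEHOLDER = re.compile(r"\{\{\{(\w+)\}\}\}")


def template_variables(template: str) -> list[str]:
    """Return each distinct variable name once, in order of first appearance."""
    names: list[str] = []
    for name in PLACEHOLDER.findall(template):
        if name not in names:  # repeated names count as one variable
            names.append(name)
    return names


# Hypothetical, shortened template for the survey example.
template = (
    "Assign each survey response to one of these categories: "
    "{{{category1}}}, {{{category2}}}, {{{category3}}}. "
    "Then count how many responses fall into {{{category1}}}, "
    "{{{category2}}} and {{{category3}}}."
)

print(template_variables(template))  # prints ['category1', 'category2', 'category3']
```

Listing each name only once is what would let a form ask for one value per variable rather than one per occurrence.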

For the survey responses categorization example, the prompt template with the included variables looks similar to the following (see previous article for the full example):

Once you click “Save”, your prompt template is ready to use.

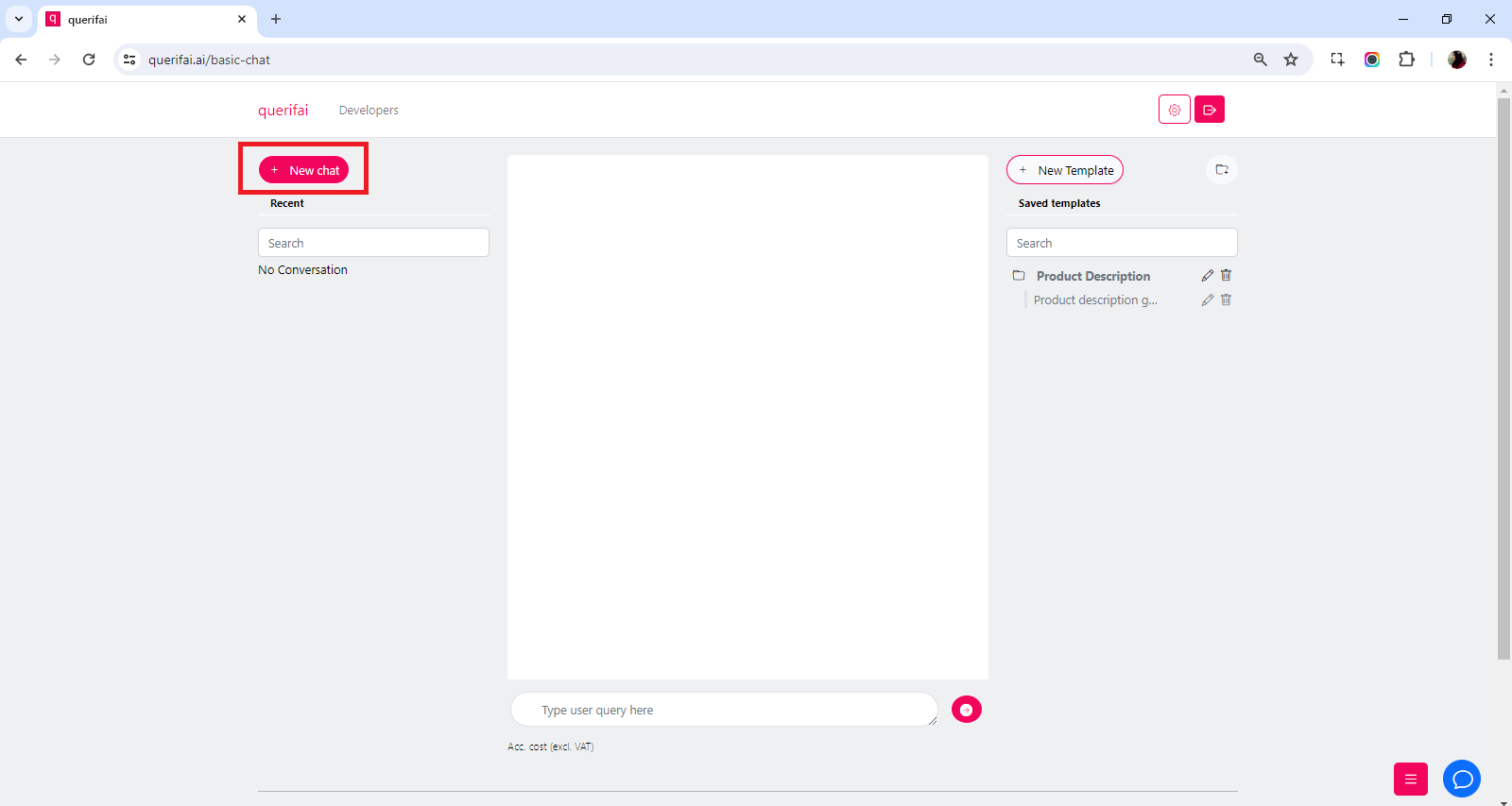

You can use a prompt template in any active chat. Here, we open a new chat by clicking “New chat”.

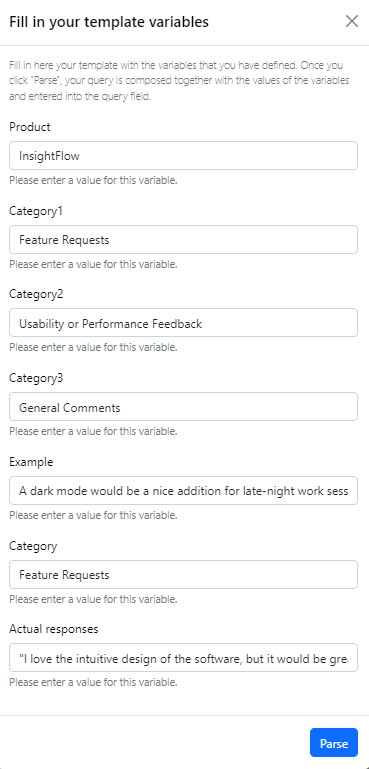

Enter a chat name, select a model and temperature, and adjust the system message if needed. Then click the saved template. A new screen appears, asking you to fill in the variables you defined earlier so that the LLM receives the full context.

When you are done, click “Parse”. The completed prompt now appears in the chat input window. It has been prepared but not yet sent, so you can still edit it manually if required. When you are ready, click the arrow to send the prompt to the LLM you selected earlier, which should return the answers you expect.
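Conceptually, the “Parse” step is a string substitution. The following is a hypothetical Python illustration, not querifai's actual code: it assumes the {{{name}}} syntax described earlier in this article, replaces every occurrence of each variable with the value you entered, and refuses to build a prompt while any variable is still unfilled. The function name `parse_template` and the example values are made up for illustration.

```python
import re

# Assumed placeholder syntax, e.g. {{{category1}}}.
PLACEHOLDER = re.compile(r"\{\{\{(\w+)\}\}\}")


def parse_template(template: str, values: dict[str, str]) -> str:
    """Replace every {{{name}}} with values[name]; fail if a value is missing."""
    missing = sorted(set(PLACEHOLDER.findall(template)) - set(values))
    if missing:
        raise ValueError(f"No value provided for: {', '.join(missing)}")
    # Passing a function to re.sub inserts each value literally, so
    # backslashes in user input are not treated as group references.
    return PLACEHOLDER.sub(lambda m: values[m.group(1)], template)


template = (
    "Sort each response into {{{category1}}} or {{{category2}}}. "
    "Use {{{category1}}} when unsure."
)
prompt = parse_template(template, {"category1": "pricing", "category2": "usability"})
print(prompt)  # prints Sort each response into pricing or usability. Use pricing when unsure.
```

Failing loudly on a missing value mirrors the idea behind the fill-in screen: the prompt only reaches the chat input once every variable has a value.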

Use Cases for Prompt Templates

Now that we have discussed why and how to use prompt templates, here are some ideas for concrete use cases to get you started:

- Content Creation for LinkedIn: Generate LinkedIn posts on specific topics by defining the variables for topic, tone, format, target audience, etc., ensuring consistency and relevance.

- Competitor Social Media Analysis: Analyze your competitors’ LinkedIn posts to understand their tonality, topic clusters, and formatting, using variables to tailor the analysis to different competitors.

- Product Descriptions: Create detailed and consistent product descriptions for e-commerce sites by defining variables like product name, features, and benefits for products in the same category, e.g., LED, OLED, and LCD screens.

- Customer Support Responses: Generate consistent and personalized responses to common customer queries by defining variables such as customer name, issue type, and resolution steps.

- Job Description Creation: Create comprehensive job descriptions by defining variables like job titles, responsibilities, required qualifications, and company information.

- Educational Content: Develop personalized lesson plans or educational content by specifying variables such as subject, grade level, and learning objectives.

- Health and Fitness Plans: Design personalized fitness or diet plans by specifying variables such as fitness goals, dietary preferences, and workout routines.

- DALL-E Image Generation: Create prompts for generating images with DALL-E by specifying variables such as the subject (e.g., "a sunset over mountains"), style (e.g., "impressionist painting"), and context (e.g., "used in a travel magazine"), ensuring precision and relevance for each image generation task.

Limitations of Prompt Templates

While prompt templates can be very helpful, sometimes the combination of a prompt template with an existing LLM is not enough:

- LLMs have a knowledge cut-off, i.e., they have no information about anything that happened after they were trained or last updated. In addition, they typically do not have access to non-public data sources. For these cases, you can try out our “chat with documents” service, where you can upload your proprietary data, select models, and ask questions about this data, with or without prompt templates. You can find a description of this service here (link to Blog). If you would rather analyze your documents from Excel, you can also use our Excel add-in, which makes it easy to compare answers from different data sources, e.g., different vendors’ responses to a request for proposal or different versions of your own proposals (Blog coming soon).

- When you use LLMs to categorize large amounts of somewhat nuanced text, the outcomes may not always meet expectations. In that case, it can help to first remove unnecessary data, e.g., by reviewing frequent words and dropping entries that contain unwanted topics, and then to give the LLM more guidance on the remaining data by providing classification examples. We have designed a Language AI for Spreadsheets service that supports this and other use cases with a focus on tabular data (Blog coming soon).

- In some cases, publicly available LLMs are not trained on the task you need and do not provide the results you need, even after you apply good prompt engineering practices. querifai can help you fine-tune some existing LLMs on your data and make them available to you within our platform.
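As a rough illustration of the two steps in the second point above, the following Python sketch counts frequent words, drops responses that mention unwanted topics, and builds a few-shot classification prompt from labelled examples. It is a generic, hypothetical example and not how our Language AI for Spreadsheets service works internally; the responses, the stop-word list, the unwanted keywords, and the labels are all made up for illustration.

```python
import re
from collections import Counter

# Minimal, hypothetical stop-word list; a real one would be much longer.
STOP_WORDS = {"the", "a", "is", "on", "too", "here", "and", "to"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def frequent_words(texts: list[str], top_n: int = 5) -> list[tuple[str, int]]:
    """Count non-stop-words across all texts to reveal recurring topics."""
    counts = Counter(w for t in texts for w in tokenize(t) if w not in STOP_WORDS)
    return counts.most_common(top_n)


def drop_unwanted(texts: list[str], unwanted: set[str]) -> list[str]:
    """Keep only texts that contain none of the unwanted keywords."""
    return [t for t in texts if not unwanted & set(tokenize(t))]


def few_shot_prompt(examples: list[tuple[str, str]], texts: list[str]) -> str:
    """Show labelled examples first, then list the texts to classify."""
    lines = ["Classify each response into one category. Examples:"]
    lines += [f'"{text}" -> {label}' for text, label in examples]
    lines.append("Responses to classify:")
    lines += [f'- "{text}"' for text in texts]
    return "\n".join(lines)


responses = [
    "The app is too expensive",
    "Great support team",
    "The app crashes on login",
    "Win a free cruise, click here",  # off-topic entry we want to remove
]

print(frequent_words(responses, top_n=3))
kept = drop_unwanted(responses, unwanted={"cruise", "click"})
examples = [("Far too pricey for a small team", "pricing"), ("Keeps freezing", "bugs")]
print(few_shot_prompt(examples, kept))
```

Filtering before prompting keeps off-topic entries from consuming the model's context, while the labelled examples show the model what each category means in your data.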

Conclusion

In our previous article, we explained several prompt engineering techniques and provided practical examples. Prompt engineering plays a pivotal role in getting the most out of large language models, but it often takes multiple iterations to craft the right prompt for a specific task. By using prompt templates on querifai.ai, you avoid duplicate work: you reuse the prompts that worked for you and build your private best-practice library.

If you need LLMs to use your documents as input, try out our “Chat with documents” service. If you want to interact with tabular data, check out our AI for Spreadsheets service.

About querifai.ai

querifai.ai is a user-friendly, AI-powered SaaS platform designed for both businesses and individuals. With querifai.ai, you simply select an AI use case, upload your relevant data, select vendors, and compare the results they generate. Our platform harnesses multiple cloud-based AI services from industry leaders like Google, AWS, and Azure, making it easy for anyone to benefit from AI technology, regardless of their level of expertise.

If out-of-the-box AI services are not suitable for your use case, we also build AI-based data products, human-in-the-loop workflows that combine AI services with human feedback, and other workflows tailored to your situation, directly on querifai.ai.